The world is on the cusp of a generative AI revolution, thanks to the advancements in artificial intelligence (AI) and machine learning. Generative models can create new and unique content such as text, images, and audio in a manner that mimics human-like style and content.

This exciting new technology is being used in a variety of applications, from video game development to medical research.

The above passage was written entirely by OpenAI’s ChatGPT, which drew over 1 million users within just five days of launching in late November, making it one of the fastest-growing online platforms in the world.

ChatGPT can hold conversations and write software code and essays, among many other impressive capabilities.

From videos to books and customizable stock images and even cloned voice recordings, one can now create an infinite amount of content in seconds with the latest generative AI technology.

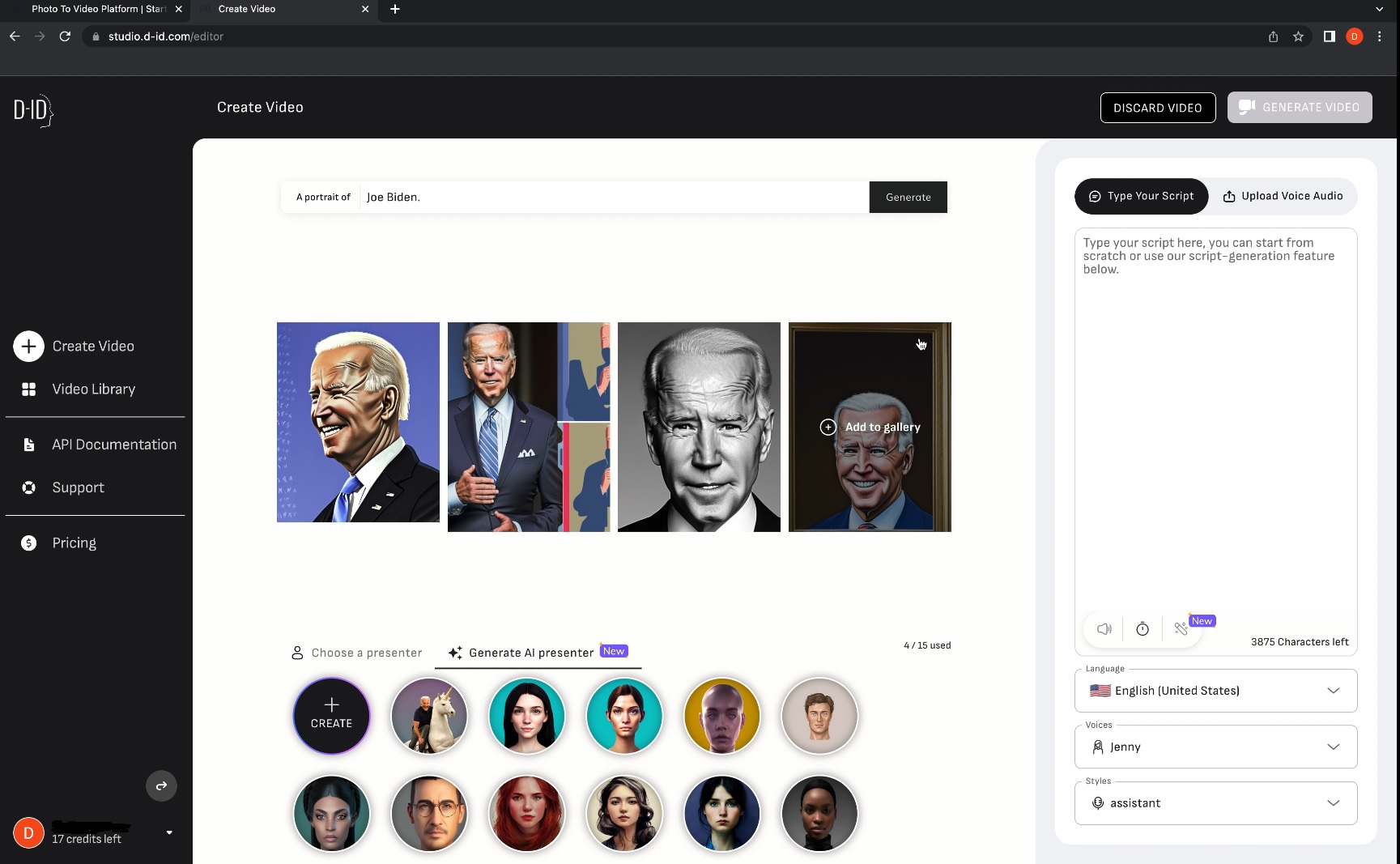

Tel Aviv-based company D-ID’s Creative Reality Studio, which launched last month, enables users to make videos from still images. You can generate an AI avatar or use your own photo, input a script (written by a human and/or an AI), and then the platform does the rest.

We asked D-ID’s Creative Reality Studio to generate avatars of US President Joe Biden in order to see if the platform could be used to spread misinformation. None of the avatars the AI produced was realistic enough for such purposes. (Screenshot)

D-ID is now working with a range of clients, including MyHeritage, Warner Bros., and Mondelez International.

“With generative AI we’re on the brink of a revolution,” Matthew Kershaw, vice president of commercial strategy at D-ID, told The Media Line. “People are just learning and starting to realize how it can be used. It’s going to turn us all into creators. Suddenly instead of needing craft skills, needing to know how to do video editing or illustration, you’ll be able to access those things and actually it’s going to democratize that creativity.”

Kershaw believes that in the near future, people will even be able to produce feature films at home with the help of generative AI.

This is how it works: The AI is trained with massive data sets, made up of pictures, art, literature, and music. It then uses these data sets to produce something new.

Professor Yoav Shoham, co-founder and co-CEO at AI21 Labs. (Wikimedia Commons/Arielinson)

“Imagine that somebody, or in this case something, went and read a lot of stuff,” Professor Yoav Shoham, co-founder and co-CEO at AI21 Labs, told The Media Line. “By a lot of stuff, I mean everything that’s ever been written – the whole web, all the books that are online – and based on that learned to predict patterns.”
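To make the idea of “learning to predict patterns” concrete, here is a minimal sketch in Python. It is a toy illustration only, not how ChatGPT or AI21 Labs’ products work: commercial systems rely on large neural networks trained on vast amounts of text, whereas this example simply counts which word follows which in a single sentence. The function names `train_bigram_model` and `generate`, and the sample sentence, are hypothetical and chosen purely for illustration.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record, for every word, the words that followed it in the training text."""
    words = text.split()
    model = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        model[current_word].append(next_word)
    return dict(model)


def generate(model, prompt, max_words=10, seed=0):
    """Extend the prompt one word at a time, picking each next word
    from the words seen after the current one during training."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [prompt]
    for _ in range(max_words):
        followers = model.get(output[-1])
        if not followers:  # this word was never followed by anything
            break
        output.append(rng.choice(followers))
    return " ".join(output)


# Hypothetical training text; a real system would read billions of words.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

Given the single word “the” as a prompt, the sketch strings together a longer phrase using only word pairs it has already seen. The same principle, at vastly greater scale and sophistication, is what lets a generative model turn a short prompt into paragraphs of text.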

Located in Tel Aviv, AI21 Labs is a world leader in Natural Language Processing (NLP) technology.

The company has three AI products on the market today: AI21 Studio, which allows developers to build their own NLP-based apps and services; Wordtune, a personal AI writing assistant; and Wordtune Read, which summarizes long documents.

Shoham, who is also a professor emeritus of computer science at Stanford University, succinctly described generative AI as being “the idea that you put in a little and you get back a lot.”

The United States is currently leading in the generative AI arena, followed by Israel and Canada, he said.

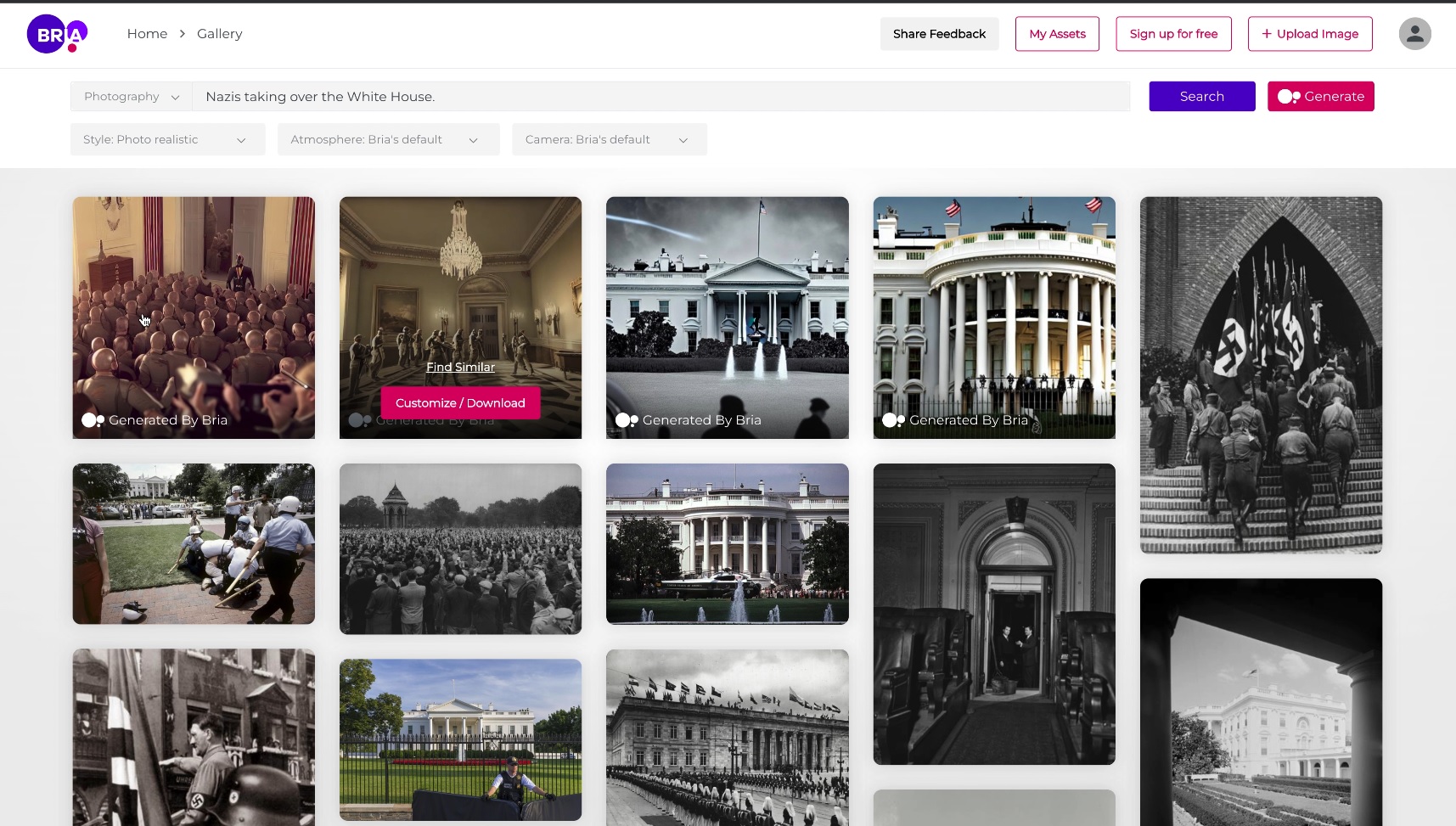

We asked Bria’s AI to generate a “fake news” image of Nazis taking over the White House, but none of the results would be usable in a news context. (Screenshot)

Several other Israeli firms have also thrown their hats into the generative AI ring, including Bria.

The Tel Aviv startup’s state-of-the-art platform allows users to make their own customizable images, in which everything from a person’s ethnicity to their expression can be easily modified. It recently partnered with stock image giant Getty Images.

“The technology can help anyone to find the right image and then modify it: to replace the object, presenter, background, and even elements like branding and copy. It can generate [images] from scratch or modify existing visuals,” Yair Adato, co-founder and CEO at Bria, told The Media Line.

Yair Adato, co-founder and CEO at Bria. (Maya Margit/The Media Line)

Founded in 2020, Bria is targeting the B2B – or business-to-business – space and hopes to transform the way marketing and e-commerce teams work.

“The idea is to help [businesses] be much more efficient, independent, and productive when they create the visual they need,” Adato explained.

The Rise of AI-generated Fake News

Although generative AI is still in its infancy, it has already shown amazing potential to revolutionize the way we work, create, and analyze data.

But it has also opened a can of worms when it comes to ethics.

In a world where every image, video, and text can be made at the click of a button, how will we be able to distinguish between what is real and what is synthetic?

For example, fake news and AI-generated TV reports could become part of our everyday media landscape.

“The issue of knowing what’s true and what’s not true, what’s an opinion and whose opinion it is I think is key,” AI21 Labs’ Shoham said. “I think so much of what’s happening in the world now is being destabilized by having all of these firm knowledge foundations we had being undermined. To me, that’s one of the biggest challenges.”

The rise of synthetic media has made it easier than ever to produce deepfake audio and video. Microsoft researchers, for instance, recently announced a new AI model that can clone a person’s voice from just a few seconds of audio. Called VALL-E, it simulates a person’s speech and acoustic environment after listening to a three-second recording.

Some widely available generative AI apps and platforms allow users to produce any kind of content they want – whether it be racist, violent, or benign. Others, however, have put in place safeguards to prevent users from producing harmful content or spreading misinformation.

Both Bria and D-ID, for example, block users from uploading images of celebrities or generating visuals that might contain offensive imagery. Their content is also watermarked so that people will know it is AI-generated.

“We put in place a lot of measures to ensure that the user who’s viewing this content knows it’s been created by an AI,” D-ID’s Kershaw related. “We’ve had hundreds of millions of videos created and I can count on one hand the number of issues we’ve had.”

Matthew Kershaw, vice president of commercial strategy at D-ID. (Maya Margit/The Media Line)

To test these systems, we asked D-ID’s AI to generate avatars of US President Joe Biden that could then be used in videos. None of the results was realistic.

We also tried to trick Bria’s system into making believable images of Nazis taking over the White House. Once again, the resulting photos would not be usable in a news context.

“We try to completely differentiate between visuals that reflect a memory, news event or something which really happened, and different types of visuals that tell a story that you use for marketing or for e-commerce,” Bria’s Adato confirmed. “If you try to do something that is a fake, our intention is to block it and not let you create something which you don’t have the rights to or that is [deceitful].”

Who Is the Author?

Deepfakes and misinformation aside, other ethical considerations have arisen in relation to this powerful new technology.

For example, who owns the rights to AI-created content, and will artists – whose works are sometimes being used to train AI models – be compensated? Will works created by AI be eligible for copyright protection?

There is also the risk that generative AI could displace human workers and make many jobs obsolete, especially as a growing number of roles become automated.

Nevertheless, D-ID’s Kershaw is optimistic that AI will not replace us.

“We’ve been automating everything for 100 years and yet we still have full employment and I think that this technology will be the same,” he said.

Others in the industry are adamant that, on the contrary, generative AI will lead to job growth and free up time for workers to become more productive than before.

“I think more interestingly for me are not the jobs that are replaced but the jobs that are empowered with strong tools that give you a research assistant at your side, or an editor at your side, and suddenly you as a creative content generator can be just so much more creative,” AI21 Labs’ Shoham said.

In any case, it seems that there is no stopping the revolution.

In fact, the global generative AI market is expected to reach $109.37 billion by 2030, according to a new report by Grand View Research.

“A lot of the tech industry at the moment is suffering a bit of a downturn and there have been quite a lot of layoffs but generative AI is one of the few patches of real growth,” Kershaw noted. “That is because not only is it really interesting from a technical point of view, but it has real-world applications which are just being realized.”

As the world leaps into this AI-driven future, it will become increasingly difficult to pinpoint the line between the real and the artificial.

When AI can do everything from writing books to creating art, making videos, and more, what role will humans play? What is the endgame of this innovative technology and how will it transform our world?

No one knows for sure, but in the coming years, AI will change our lives and perhaps even redefine reality as we know it.